Mission to TensorFlow World

Written by Meaza Abate, Developer

TensorFlow is Google’s industry-leading machine learning platform. In anticipation of the inaugural TensorFlow World conference this past fall, the TensorFlow marketing team asked Instrument to create an in-person experience at the conference that would show off the platform’s wide range of capabilities in a novel and compelling way.

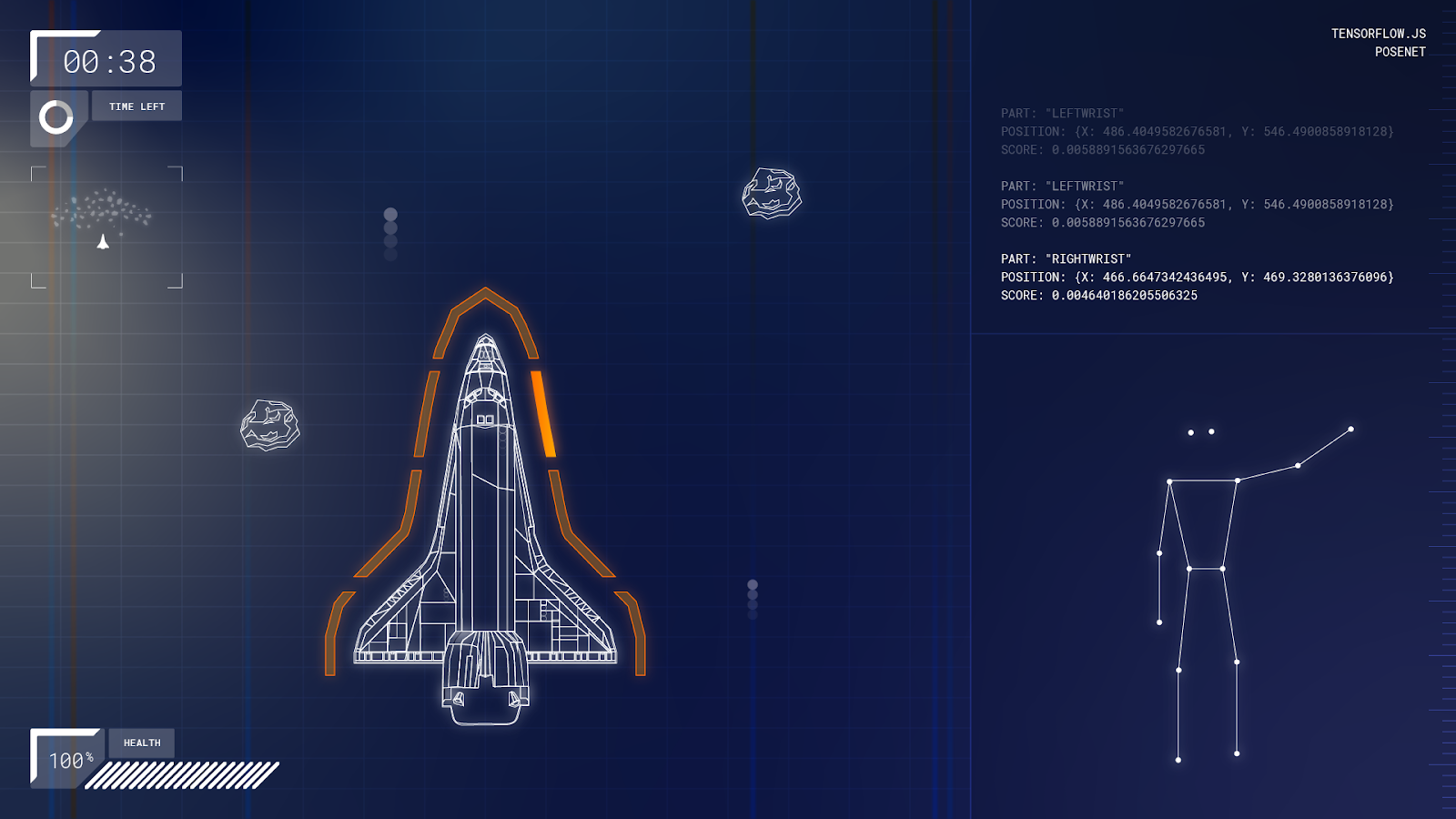

To highlight the range of TensorFlow’s machine-learning products, Instrument created a unifying theme and a visual language to support it. From there we built a series of engaging demos that told the TensorFlow story through a unique installation: a group of 3 space-themed interactive games that used TensorFlow technologies and influenced the movement of a shuttle on a large central display as it made its journey through space.

Mission to TensorFlow World is a weird and fun installation-based gaming experience geared towards machine learning. It was inspired by the 50th anniversary of the Apollo moon landing. The game’s mechanics rely on machine learning models built using various TensorFlow products. The experience highlights the power of machine learning and its tools through creative and interactive applications.

The creation of this experience was a long, laborious, and fun journey, and new territory for our team. We only had 4 months and a small core team of 8 to ideate and execute. We were asked to build an experience that could highlight the various TensorFlow products and the ecosystem that they live in while presenting the power of machine learning in a fun and approachable manner. The installations needed to work for both the TensorFlow Roadshow (Sept - Nov 2019), a series of conferences set around the world that would host no more than a couple hundred attendees each, and the first TensorFlow World Conference (Oct 31 - Nov 1 2019), an annual conference in Santa Clara, California for thousands of machine learning enthusiasts.

The project was a delicate balancing act; it demanded a lot of work within a short timespan. We needed an experience that would emphasize the difficulty without diminishing the fun, one that would work for small travelling events and large established ones, one that made use of different platforms while showcasing the connections between them. After a lot of ideas (65 to be exact), we landed on an experience that we felt would meet all these requirements.

The result was 4 separate installations that work together to create one immersive experience. One of the installations serves as a visual piece that ties everything together. The other 3 are PoseShield, a TensorFlow.js game that uses body recognition to defend a spaceship from asteroids; Emojicules, a TensorFlow Lite game in which players mimic emojis to capture air molecules; and Startographer, a TensorFlow Core word association game that allows players to navigate through space.

This concept enabled us to fulfill all of our previously established requirements. The independent yet tandem nature of the installations showcased the different platforms as well as their compatibility. The fact that 3 of the installations were games in their own right allowed us to create an experience that could both travel the world and meet the needs of small audiences as well as large ones. In that spirit, we completed PoseShield first and sent that off to travel on the TensorFlow Roadshow. The games themselves are fun and silly, as you can probably tell by the names, but manage to keep the technology and machine learning at the forefront through signage, copy and the UI, which exposes the data.

This project was quite substantial and each installation came with its own set of challenges. If I were to write about all of them, this article would not end. For this reason, I will be focusing on PoseShield, the only one that lives on the web. You can play it right now!

Play PoseShield

PoseShield

PoseShield, as described above, is a game in which the player is asked to use their hands to protect their spaceship from incoming asteroids. It was built using TensorFlow.js and Vue.js.

The left side of the screen displays the spaceship and asteroids. The orange panels that surround the ship light up as the player moves their hands around. The panels correspond to 7 zones that are not visible to the player. The player is only able to see their skeleton along with the model’s output on the right side of the screen.

A lot of thought was put into creating this experience. Here is my best attempt to condense four weeks of learning, collaborating, and hair pulling into one article.

TLDR: Machine learning is cool. Planning out your architecture is important. Some math. Testing is vital. PoseShield is fun.

PoseNet. What is it?

PoseNet is a machine learning model that is trained to estimate the positioning of a human body. It does this by detecting 17 keypoints. It is built using TensorFlow.js and can easily be run in a browser. You can learn more about it here.
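
As a rough illustration of what the game has to work with, a single PoseNet pose is an object holding an overall score and a `keypoints` array, where each entry carries a `part` name, a confidence `score`, and a pixel `position`. The sketch below pulls the two wrists out of such an object. The `getHands` helper, the 0.5 confidence threshold, and the sample data are illustrative assumptions, not code from PoseShield.

```javascript
// Minimal sketch: extract confident wrist positions from a PoseNet-style pose.
// `getHands` and its default threshold are hypothetical, for illustration only.
function getHands(pose, minScore = 0.5) {
  return pose.keypoints
    .filter((kp) => kp.part === 'leftWrist' || kp.part === 'rightWrist')
    .filter((kp) => kp.score >= minScore)
    .map((kp) => ({ part: kp.part, x: kp.position.x, y: kp.position.y }));
}

// Hand-written sample in the shape of a pose (only 3 of the 17 keypoints shown).
const samplePose = {
  score: 0.9,
  keypoints: [
    { part: 'nose', score: 0.99, position: { x: 320, y: 120 } },
    { part: 'leftWrist', score: 0.85, position: { x: 450, y: 300 } },
    { part: 'rightWrist', score: 0.2, position: { x: 180, y: 310 } },
  ],
};

// Only the left wrist clears the threshold in this sample.
const hands = getHands(samplePose);
```

Dropping low-confidence keypoints like this is one way to avoid lighting up a panel when the model is only guessing where a hand is.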

Architecture

We had about 4 weeks to develop and test PoseShield. Since the timeline was so short, we decided to keep things as modular as possible to allow for parallel work streams that wouldn’t interfere with one another.

From the outset, we knew we were going to use the PoseNet library, that we would need a system in place to handle the zones, and of course, our display (UI) layer. Given that the UI layer doesn’t need to know everything in that library, we wanted to create an architecture that allowed at least a degree of separation between the two.

Following this logic, we created a layer called ZoneDetector that would be initialized by the UI layer. We also created what we called the PoseNetAdaptor which served as a simple communication layer with the PoseNet Library. This modularity allowed us to make changes efficiently and with a minimal effect on the other layers.
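
The layering described above could look roughly like the sketch below. Only the names ZoneDetector and PoseNetAdaptor come from the project; the method names, constructor arguments, and the injected `hitTest` function are assumptions made for illustration.

```javascript
// Hypothetical sketch of the layering; class shapes and method names are assumed.

// Thin wrapper around the pose library: the only layer that knows its API.
class PoseNetAdaptor {
  constructor(net) {
    this.net = net; // anything exposing estimateSinglePose(input)
  }

  async getParts(input) {
    const pose = await this.net.estimateSinglePose(input);
    // Flatten to a simple { partName: {x, y} } map for the layers above.
    const parts = {};
    for (const kp of pose.keypoints) parts[kp.part] = kp.position;
    return parts;
  }
}

// Owns the zones; the UI layer creates it and only ever sees zone indices.
class ZoneDetector {
  constructor(adaptor, zones, hitTest) {
    this.adaptor = adaptor;
    this.zones = zones;     // array of polygons
    this.hitTest = hitTest; // (point, polygon) => boolean
  }

  async activeZones(input) {
    const parts = await this.adaptor.getParts(input);
    const hands = [parts.leftWrist, parts.rightWrist].filter(Boolean);
    return this.zones
      .map((polygon, index) => ({ polygon, index }))
      .filter(({ polygon }) => hands.some((hand) => this.hitTest(hand, polygon)))
      .map(({ index }) => index);
  }
}
```

With this split, swapping the model or changing the zone geometry touches one class each, and the UI only consumes a list of active zone indices.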

Getting the Zones Right

One big challenge was figuring out how to section the 7 zones to work for every participant. We wanted this experience to be accessible to as many users as possible. Moreover, we did not want to ask individuals to step back or adjust the camera angle. Therefore, we had to create a zone mechanism that could be personalized to each user.

To start, we created predetermined zones. We split the screen into 7 zones and got to testing. We quickly found that this system made it difficult for many individuals to reach the upper and lower sections. Taller users were expected to keep their hands close to their person while shorter people had to stretch like no one’s business and often couldn’t reach the top zones.

We began looking into how we could use the other PoseNet key points to create a more dynamic zoning system. We started with the shoulders, adjusting the zones in accordance with the player’s shoulders, which enabled us to move the zones vertically as necessary.

We attached the top horizontal line to the user’s shoulders, which accounted for the user’s height. We kept iterating on this idea as we tested. While we knew moving the top line was working well, we didn’t know how this should affect the other zones. In testing we noticed the range of movement different players exhibited: some people are very animated and tend to move away from the center, squat, and jump.

After many iterations, we found the right shape, size and dynamism that we needed to make the zones accessible for all players. We moved the zones horizontally and vertically and had them squash and stretch to make sure each zone was within reach.
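
One way to get zones that follow and resize with the player is to define them relative to the shoulders and transform them every frame. The sketch below is a simplified guess at that idea, not the geometry PoseShield actually uses: the `fitZones` name, the "shoulder unit" template, and the sample zone are all hypothetical.

```javascript
// Hypothetical sketch: fit a zone template to a player using their shoulders.
// Template polygons are in "shoulder units": origin at the shoulder midpoint,
// 1 unit = the distance between the two shoulders.
function fitZones(template, leftShoulder, rightShoulder) {
  const cx = (leftShoulder.x + rightShoulder.x) / 2;
  const cy = (leftShoulder.y + rightShoulder.y) / 2;
  const unit = Math.hypot(
    rightShoulder.x - leftShoulder.x,
    rightShoulder.y - leftShoulder.y
  );
  // Translate (follow the player) and scale (squash and stretch) each vertex.
  return template.map((polygon) =>
    polygon.map(([u, v]) => [cx + u * unit, cy + v * unit])
  );
}

// One illustrative zone: a box sitting on the shoulder line
// (screen y grows downward, so negative v is above the shoulders).
const zoneTemplate = [[[-1, -2], [1, -2], [1, 0], [-1, 0]]];
const fittedZones = fitZones(zoneTemplate, { x: 200, y: 300 }, { x: 300, y: 300 });
```

Because the template scales with shoulder width, a player standing farther from the camera gets proportionally smaller zones without being asked to step forward.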

Ray Casting

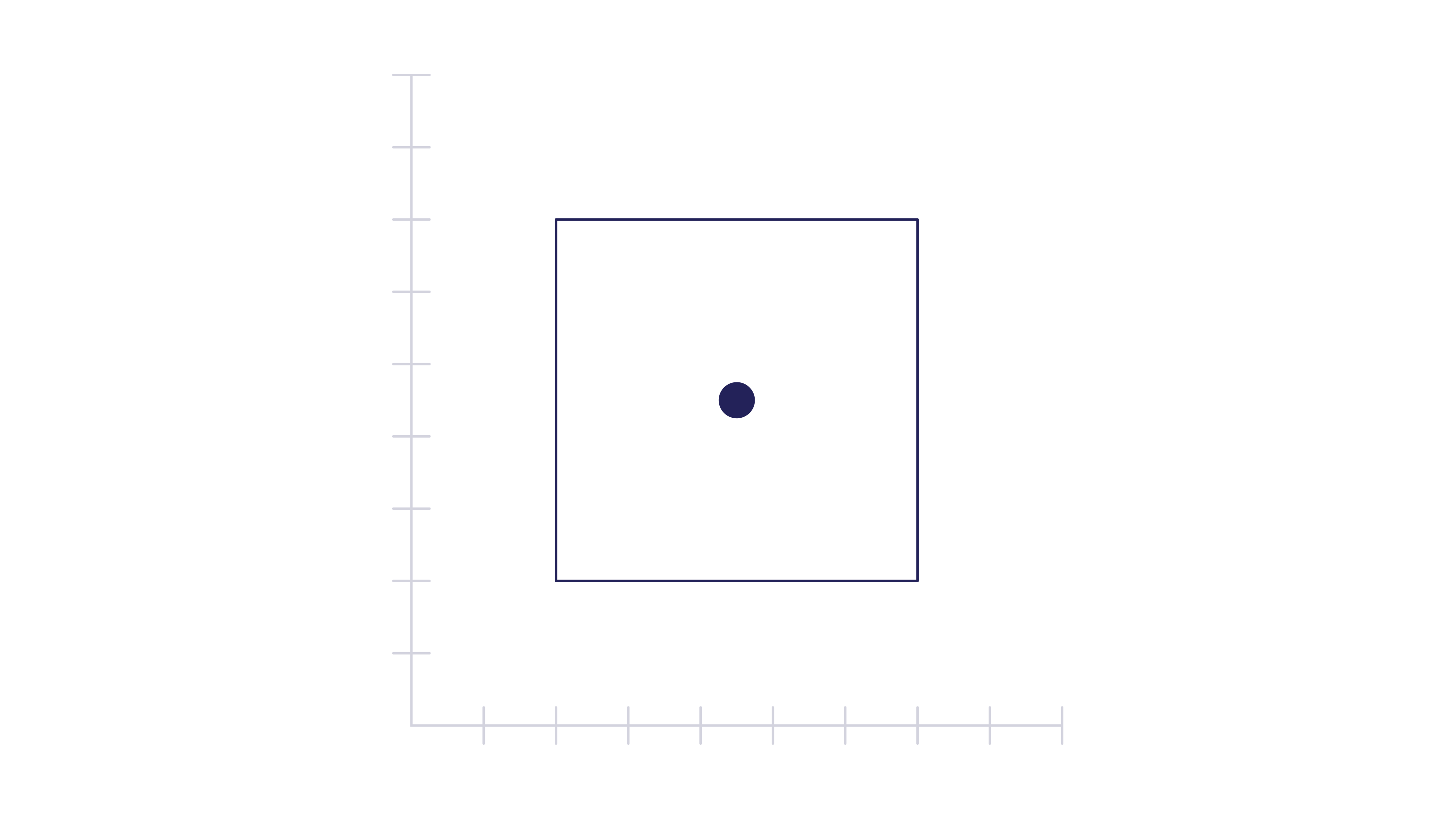

Another one of the challenges was accurately reflecting the player’s movement in the gameplay. This boiled down to being able to identify when the player’s hands were in a given zone. This might feel like a trivial problem at first glance because it is so easy to discern visually, and when the shape is something simple, let’s say a square, it is pretty trivial.

The mathematical check that the point is within the bounds is almost as easy as the visual one. You can simply check that the point’s x and y coordinates are greater than the shape’s min x and y values and less than its max x and y values.
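
For an axis-aligned square or rectangle, that check fits in a few lines. The `isInsideRect` name and the `{minX, minY, maxX, maxY}` shape are made up for this sketch.

```javascript
// Axis-aligned rectangle test: the point is inside when both of its
// coordinates fall strictly between the rectangle's min and max values.
function isInsideRect(point, rect) {
  return (
    point.x > rect.minX && point.x < rect.maxX &&
    point.y > rect.minY && point.y < rect.maxY
  );
}

const square = { minX: 0, minY: 0, maxX: 100, maxY: 100 };
const insideSquare = isInsideRect({ x: 50, y: 50 }, square);
const outsideSquare = isInsideRect({ x: 150, y: 50 }, square);
```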

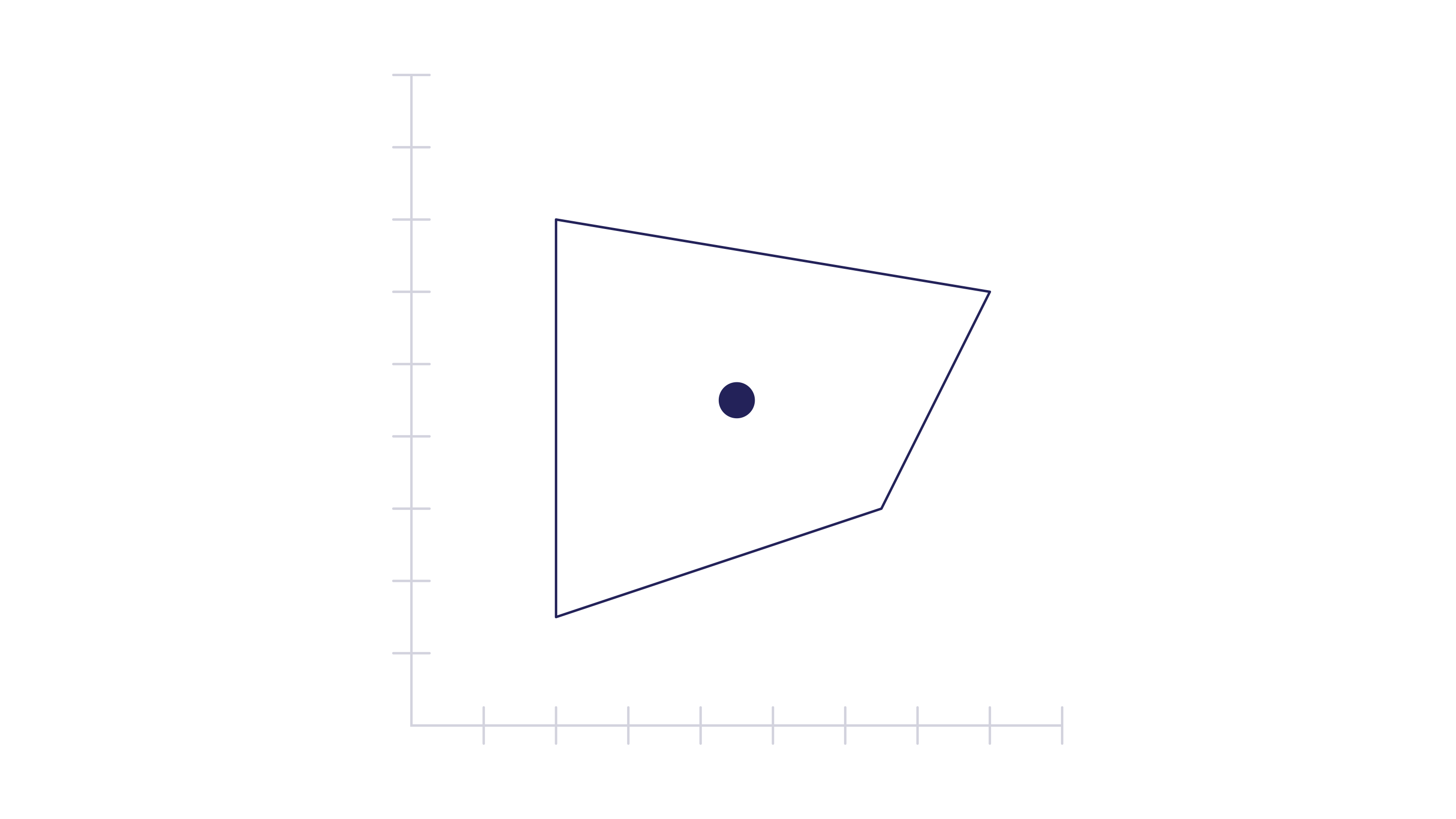

However, consider a more complicated shape like this one. The check is no longer as simple. Unlike the previous case, the min and max of y do not stay the same as the value of x changes and vice versa.

One of the ways to get around this is using ray casting. The idea is to cast a ray from the point through the shape and count how many times it intersects the shape. Odd means it’s within the bounds of the shape and even means it’s not.

As illustrated here, a ray cast from the point within the shape intersects the perimeter once (odd), while a ray cast from the point outside the shape does so twice (even) or not at all (0 is also even). There are edge cases to account for, but we were not engaged in hard science and did not need to spend much time thinking about them.

Below is a function that implements ray casting. The function takes 2 arguments: an object with the coordinates of the point ({x, y}) and an array of arrays that contain the coordinates of the points that make up the polygon ([[x1, y1], [x2, y2], …]). The function loops through the sides of the polygon and checks each one against the ray that has been cast. A boolean value is used to determine whether or not the point is inside the polygon. The value defaults to ‘false’ and simply flips with every intersection. You can easily replace this with a count and check if you ended up with an even or odd value.

    // Returns true when point ({x, y}) lies inside polygon ([[x1, y1], [x2, y2], ...]).
    function isInside(point, polygon) {
      const x = point.x;
      const y = point.y;
      let inside = false;

      // j trails i by one vertex; it starts at the last vertex so that
      // the closing side (last -> first) is checked too
      let j = polygon.length - 1;
      for (let i = 0; i < polygon.length; i++) {
        if (i !== 0) {
          j = i - 1;
        }

        // endpoints of the current side of the polygon
        const xi = polygon[i][0];
        const yi = polygon[i][1];
        const xj = polygon[j][0];
        const yj = polygon[j][1];

        // a horizontal ray going right from the point crosses this side when
        // the side straddles y and the crossing lies to the right of x
        const intersect = ((yi > y) !== (yj > y)) &&
          (x < (xj - xi) * (y - yi) / (yj - yi) + xi);

        // every crossing flips the inside/outside state
        if (intersect) inside = !inside;
      }
      return inside;
    }

Other Challenges

There were a host of other challenges that we faced while creating this experience. Here is a quick rundown:

Debugging

There were so many things to test that we needed to create a pretty extensive debugging system. In this pursuit, we created URL parameters that would allow us to switch between different versions of the application, the model, and the networks. It also got tiring to get up and wave your arms around for 60 seconds every time you wanted to test out the game, so we created a way to play the game using a keyboard. Pressing ‘d’ activates the debug mode and you can use the keyboard to walk through every state of the game. This feature was never disabled, so try it out.

Performance

PoseShield is not only an installation but also a web experience. As a result, we had to optimize the performance of the application to work on a range of devices. The model uses a lot of processing power and runs best on a powerful computer. However, the good people at TensorFlow built out this model so that it can be adjusted according to the user’s needs. Properties like the model’s input resolution, its quantization, output stride and architecture could be adjusted to find a balance between accuracy and performance. Knowing this was something we were going to want to test repeatedly, we made it possible to change these settings using URL parameters (also still available on the live site). This allowed us to avoid rebuilding the site every time we wanted to make a change. We tested extensively and landed on a default mode that worked pretty well.
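
Reading those settings from the query string might look something like the sketch below. The parameter names (`arch`, `stride`, `res`, `quant`) and the default values are assumptions for illustration and are not necessarily the ones the live site accepts. The keys of the returned object follow the four properties named above.

```javascript
// Hypothetical sketch: read model settings from the query string with defaults.
// Parameter names and default values are assumed, not taken from PoseShield.
const DEFAULT_MODEL_CONFIG = {
  architecture: 'MobileNetV1',
  outputStride: 16,
  inputResolution: 257,
  quantBytes: 2,
};

function configFromUrl(url) {
  const params = new URL(url).searchParams;

  // Use the fallback when a parameter is missing, empty, or not a number.
  const num = (key, fallback) => {
    const raw = params.get(key);
    if (raw === null || raw.trim() === '') return fallback;
    const value = Number(raw);
    return Number.isFinite(value) ? value : fallback;
  };

  return {
    architecture: params.get('arch') || DEFAULT_MODEL_CONFIG.architecture,
    outputStride: num('stride', DEFAULT_MODEL_CONFIG.outputStride),
    inputResolution: num('res', DEFAULT_MODEL_CONFIG.inputResolution),
    quantBytes: num('quant', DEFAULT_MODEL_CONFIG.quantBytes),
  };
}

const testedConfig = configFromUrl('https://example.com/?arch=ResNet50&stride=32');
```

In a browser, the same function could be called with `window.location.href`, so trying a different setting only means editing the address bar and reloading.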

Model Hosting

Given that we are using existing models that live in a storage bucket somewhere, we thought about what would happen if it got moved or deleted. We also wanted to make sure that when running at a conference, our experience was not dependent on the reliability of the conference network. To account for this, we had to find a way to host the models locally. Remember the thing about being able to change the parameters on the model? Well, every permutation of these parameters served up a different model, so making this work was a little more challenging than downloading a file.
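
To give a sense of why this is more than downloading one file, the sketch below maps each settings permutation to its own local path and lists every path that would need to be fetched ahead of time. The directory layout, file name, and function names are invented for illustration; the real bucket layout and PoseShield's own scheme may differ. The resulting path could then be handed to the library as a custom model URL, if the version in use supports one.

```javascript
// Hypothetical sketch: map each settings permutation to its own local model path.
// The directory layout and file name are invented for illustration.
function localModelUrl({ architecture, outputStride, quantBytes }, base = '/models') {
  return `${base}/${architecture.toLowerCase()}/quant${quantBytes}/stride${outputStride}/model.json`;
}

// Enumerate every permutation so each model can be downloaded ahead of time.
function allModelUrls(options, base) {
  const urls = [];
  for (const architecture of options.architectures) {
    for (const outputStride of options.strides) {
      for (const quantBytes of options.quantBytes) {
        urls.push(localModelUrl({ architecture, outputStride, quantBytes }, base));
      }
    }
  }
  return urls;
}

const modelUrls = allModelUrls({
  architectures: ['MobileNetV1', 'ResNet50'],
  strides: [16, 32],
  quantBytes: [1, 2, 4],
});
```

Even this small set of options yields 12 separate models, which is why the number of files grows quickly as more settings become adjustable.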

Launching the Game

Starting the game was a challenge. The only way to interact with the game was through your poses so we had to come up with a way to trigger the game that was unlikely to accidentally happen. We landed on asking players to raise their hands above their heads. This solved our immediate problem, but also made us think about this issue on a larger scale. What if we needed users to consent to something? Is raising the roof (oonts, oonts) the same as clicking an ‘I agree’ button? Just food for thought.
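
A start gesture like this can be made harder to trigger by accident by requiring it to hold for several frames in a row. The sketch below assumes a `{ partName: keypoint }` map built from the model output; the function names, the 0.5 confidence threshold, and the frame count are hypothetical.

```javascript
// Hypothetical sketch: start only after both wrists stay above the nose
// for several consecutive frames. Threshold and frame count are assumed.
function handsRaised(parts, minScore = 0.5) {
  const { nose, leftWrist, rightWrist } = parts;
  const confident = [nose, leftWrist, rightWrist].every(
    (kp) => Boolean(kp) && kp.score >= minScore
  );
  if (!confident) return false;
  // Screen y grows downward, so "above" means a smaller y value.
  return (
    leftWrist.position.y < nose.position.y &&
    rightWrist.position.y < nose.position.y
  );
}

function createStartTrigger(requiredFrames = 15) {
  let streak = 0;
  // Call once per frame; returns true once the pose has been held long enough.
  return function update(parts) {
    streak = handsRaised(parts) ? streak + 1 : 0;
    return streak >= requiredFrames;
  };
}
```

Resetting the streak on any frame where the hands drop means a quick stretch or a wave is unlikely to start the game.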

Testing

An experience like this needs extensive testing, so we had to make plenty of time for it and find a variety of people who were willing to make fools of themselves while we watched them closely. We also had to set up an elaborate physical environment to test in as we developed.

‘Open Sourcing’

To top it all off, we had to prepare the codebase for ‘open-sourcing.’ This meant we, or really just me (everyone else was writing A+ code from the get go), could not write questionable code that technically works, slap a few comments on it, and call it a day. You are welcome for all my comments.

This was a long but fulfilling project filled with highs and lows. The product you see is the result of hours of work, collaboration and learning.